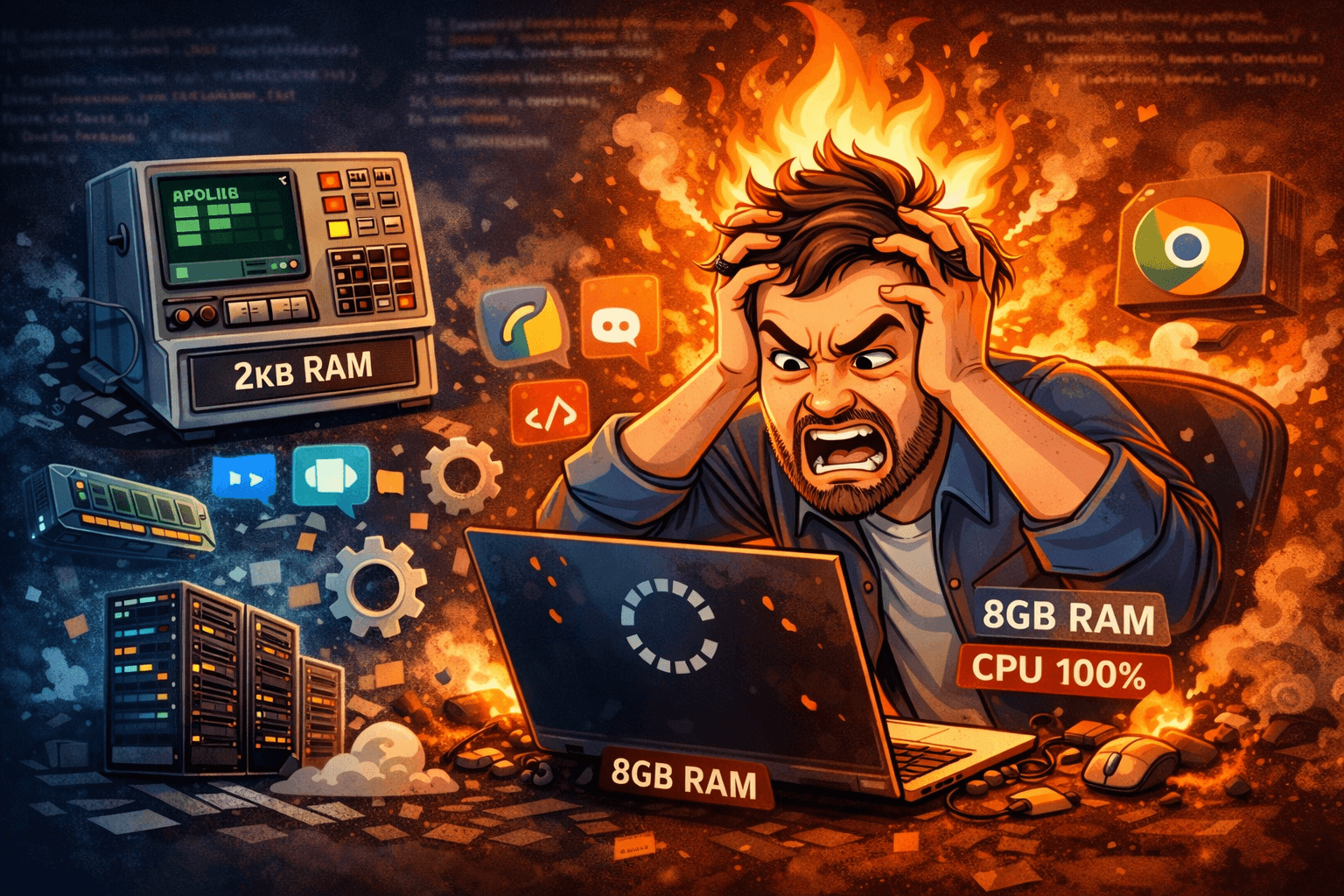

Do you think 8GB of RAM is enough to run several applications at once? For many users today, the answer is no. There was a time when 4GB of RAM kept several programs running smoothly. Lately, though, systems feel sluggish even with plenty of fast RAM and modern Intel Core CPUs. How did this happen? Was this intentional, or did something go wrong in the software development world?

To put things in perspective, the Apollo Guidance Computer (AGC) used on the Apollo missions had only 2,048 words (about 4KB) of erasable memory, its RAM, and 36,864 words (about 72KB) of fixed memory, its ROM. Yet today’s applications behave like RAM-hungry monsters. Several factors explain why modern software consumes so many system resources, especially memory and CPU.

User Apps vs. System Apps — and Why It Matters

Computer applications generally fall into two categories: User Applications and System Applications. Windows Task Manager shows this split on its Processes tab, listing “Apps” separately from “Background processes” and “Windows processes”.

- User Applications: Apps directly used by the user (e.g., browsers, messengers, editors).

- System Applications: Services used by the OS to keep essential functions running.

Sort that list by memory or CPU usage and User Applications typically account for most of the load, while individual System Applications are relatively lightweight. Because User Apps run on top of the operating system and its services, understanding this relationship helps explain why many modern programs feel heavy.

When Efficiency Was the Only Option

A perfect analogy comes from early video game consoles. Many of us grew up playing games that loaded from cartridges with extremely limited memory. Storage and RAM were scarce, so programmers had to write highly optimized code just to make anything work.

A famous example is Super Mario Bros, considered a technical marvel for its time:

- The whole game fit in 40KB of cartridge ROM (32KB of program code plus 8KB of graphics)

- Used the NES’s 2KB of RAM

- Ran on a Ricoh 2A03, a 6502-derived processor clocked at about 1.79MHz

- Written in 6502 assembly language

Due to hardware constraints, developers had no choice but to make everything lean and efficient.

The Early Internet Was Also Minimal by Design

Efficiency didn’t stop with consoles. Early internet connections were painfully slow: consumer dial-up modems ran at anywhere from 2.4Kbps to 56Kbps. Websites had to be:

- Mostly text-based

- Lightweight

- Sparse in UI, with little or no decoration

- Fast to transmit

At 2.4Kbps, even a 10KB page took over half a minute to arrive, and a page with a few images could take minutes. UX simplicity wasn’t a design choice; it was survival.
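The arithmetic behind those numbers is simple: transfer time is the page size in bits divided by the link speed. A minimal sketch, assuming 10KB means 10,000 bytes and ignoring protocol overhead (real modems added start/stop bits and retransmissions, so actual times were longer):

```python
def transfer_seconds(size_bytes: int, link_bps: float) -> float:
    """Ideal transfer time in seconds: payload bits divided by link speed in bits per second."""
    return size_bytes * 8 / link_bps

# Link speeds in bits per second: dial-up era versus a modern broadband line.
LINKS = {"2.4Kbps": 2_400, "14.4Kbps": 14_400, "56Kbps": 56_000, "100Mbps": 100_000_000}

if __name__ == "__main__":
    for label, bps in LINKS.items():
        print(f"10KB page at {label:>8}: {transfer_seconds(10_000, bps):.4g} s")
```

At 2.4Kbps that works out to about 33 seconds for the text alone; at 100Mbps the same page needs under a millisecond.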

Hardware Improved — And Software Got Heavier

As storage, RAM, and bandwidth grew, so did expectations of software. Modern CPUs have many cores that run threads and processes in parallel, RAM is far larger and faster, and storage is abundant.

Programming languages evolved alongside hardware. But instead of always compiling straight to native machine code, we gained:

- Interpreters and Just-In-Time (JIT) Compilers

- Garbage Collectors

- Wrappers and Abstraction Layers

- Virtual Machines (e.g., JVM, .NET CLR)

These enable faster development and portability, but they also increase resource usage and add overhead.
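A rough way to see that overhead, using Python as a stand-in for any managed runtime: a list of integers stores pointers to separately allocated int objects, while the standard-library `array` module packs the same values as raw 64-bit integers, much like native code would. The sketch assumes a 64-bit CPython build; exact byte counts differ across versions.

```python
import sys
from array import array

N = 1_000_000

# A list holds pointers to separate int objects, each with its own object header.
# (CPython shares the small ints -5..256, a negligible fraction of this list.)
boxed = list(range(N))
list_bytes = sys.getsizeof(boxed) + sum(sys.getsizeof(x) for x in boxed)

# array("q") packs the same values as raw signed 64-bit integers in one buffer,
# close to what a C program would allocate.
packed = array("q", range(N))
array_bytes = sys.getsizeof(packed)

if __name__ == "__main__":
    print(f"list of ints: {list_bytes / 1e6:.1f} MB")
    print(f"array('q')  : {array_bytes / 1e6:.1f} MB")
    print(f"ratio       : {list_bytes / array_bytes:.1f}x")
```

On a 64-bit CPython build the list version typically needs several times the memory of the packed array for identical data. That gap is the price of the runtime’s flexibility, the same kind of trade-off the layers listed above make.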

From Native Apps (2013) to Cross-Platform (2025)

Between 2013 and 2025, software development changed massively. Around 2013, applications were still mostly native, tightly coupled with the operating system through its platform APIs, such as Win32 on Windows or Cocoa on macOS.

Native development had benefits:

- Better performance

- Better efficiency

- Lower overhead

- More reliable system integration

But native apps were often monolithic, with every feature bundled into one large application. Maintaining that was costly, especially across multiple platforms.

Cross-Platform Development Became a Necessity

Building for multiple OS platforms was painful:

- iOS → Objective-C / Swift

- Windows → C# / C++

- Android → Java / Kotlin

Developers often had to rewrite the same logic for each platform, raising cost and complexity. Frameworks such as React Native, which drives native UI components from JavaScript over a bridge, emerged to share that code between iOS and Android. Businesses also wanted better UI/UX with faster delivery, which pushed the industry toward cross-platform and web-based applications.

“Write Once, Run Anywhere”: Java’s Philosophy Returns

Sun Microsystems (creators of Java) popularized the idea of Write Once, Run Anywhere (WORA). Java achieved this by compiling source code to bytecode for the Java Virtual Machine (JVM) rather than to machine code for a specific CPU; each platform’s JVM then runs that same bytecode.
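The idea is easy to see without Java. CPython, the standard Python interpreter, works the same way: it compiles source code to bytecode for its own virtual machine. A minimal sketch using the standard-library `dis` module; the function `add` is just an illustrative example, and the exact instructions printed vary between Python versions:

```python
import dis

def add(a, b):
    return a + b

# Print the bytecode CPython compiled `add` into. These instructions are executed
# by the interpreter's virtual machine, not directly by the physical CPU.
dis.dis(add)

# The same instructions as data; opcode names differ between Python versions.
opnames = [ins.opname for ins in dis.get_instructions(add)]
```

That bytecode runs unchanged on any machine with the same Python version, which is the portability a VM buys, paid for with an extra layer between the program and the hardware.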

This approach brought convenience but also issues:

- Out-of-Memory Errors (OutOfMemoryError) When the Heap Fills Up

- JIT Compilation Overhead and Warm-Up Time

- Garbage Collection Pauses

- Suboptimal Thread Management

Later frameworks such as Flutter (Dart, with its own runtime and rendering engine) and Xamarin (the Mono/.NET runtime) followed similar patterns, each with its own drawbacks, such as UI inconsistencies or problems integrating with native features.

Cloud, Web Tech & Microservices Take Over

As average internet speeds jumped from ~3Mbps to ~100Mbps and pricing dropped, cloud computing exploded. AWS, Azure, and GCP offered:

- Scalability

- Lower infrastructure cost

- Reliability

- Global deployment

This accelerated the rise of:

- Web tech (HTML, CSS, JS)

- Microservices

- Web stacks and frameworks (MERN, Ionic, ElectronJS)

Why Microservices Became Popular

Monolithic apps suffer from:

- Total failure when one subsystem crashes

- Difficulty shipping partial features

- Hard scaling and maintenance

Microservices solve these issues by isolating features into independent components, deployed and scaled separately.
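A minimal sketch of that isolation, assuming each hypothetical “service” is a separate OS process (real microservices usually run in containers and talk over HTTP or gRPC, which this omits). The `search` service is rigged to crash; the caller reports it as down and keeps serving the others:

```python
import subprocess
import sys

# Hypothetical services: each is a tiny Python program run in its own process.
SERVICES = {
    "auth": "print('auth ok')",
    "search": "raise RuntimeError('search index corrupted')",  # simulated crash
    "billing": "print('billing ok')",
}

def call_service(name: str, code: str) -> str:
    """Run one service in an isolated OS process; a crash there cannot kill the caller."""
    result = subprocess.run(
        [sys.executable, "-c", code], capture_output=True, text=True, timeout=30
    )
    if result.returncode != 0:
        return f"{name}: DOWN (exit code {result.returncode})"
    return f"{name}: {result.stdout.strip()}"

if __name__ == "__main__":
    for name, code in SERVICES.items():
        print(call_service(name, code))
```

The trade-off ties back to memory: every service here starts a full Python interpreter of its own, so isolation is paid for with a duplicated runtime per service.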

Enter Electron, Chromium, and Heavy Desktop Apps

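Today, Chromium-based browsers such as Chrome, Edge, Opera, and Brave account for roughly 70% or more of browser usage, and by some estimates 20–25% of desktop apps are built on Chromium as well. Chromium makes it possible to package a web app and ship it as a desktop app.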

Frameworks like ElectronJS bundle:

- A full Chromium instance

- A Node.js runtime

This achieves cross-platform portability, but at a cost: every app brings its own mini-browser.

Examples of apps built on Electron or another embedded Chromium shell include:

- Slack

- Discord

- WhatsApp Desktop

- Steam (partly, via the Chromium Embedded Framework)

- Spotify (via the Chromium Embedded Framework)

- Figma (desktop)

- VS Code

Just one Chrome browser with a few tabs can exceed 2GB of RAM. Every Electron app runs its own copy of that same engine, so the baseline cost is paid again for each app open at the same time.
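To check this on your own machine, here is a rough sketch for Linux or macOS. It shells out to `ps -eo rss=,comm=` (resident memory in KiB plus process name) and sums the figures per process name, so an app’s main, renderer, and GPU processes add up when they share a name; on macOS, helpers often appear under separate helper names. RSS counts shared pages once per process, so treat the totals as an upper bound rather than exact usage.

```python
import subprocess
from collections import defaultdict

def aggregate_rss(ps_output: str) -> dict[str, int]:
    """Sum resident memory (KiB) per process name from `ps -eo rss=,comm=` output."""
    totals: dict[str, int] = defaultdict(int)
    for line in ps_output.splitlines():
        parts = line.strip().split(None, 1)
        if len(parts) != 2 or not parts[0].isdigit():
            continue  # skip blank or malformed lines
        # comm may be a full path (e.g. on macOS); group by its last component.
        name = parts[1].rsplit("/", 1)[-1]
        totals[name] += int(parts[0])
    return dict(totals)

def top_memory_users(limit: int = 10) -> list[tuple[str, float]]:
    """Return the `limit` process names with the largest summed RSS, in MiB."""
    out = subprocess.run(
        ["ps", "-eo", "rss=,comm="], capture_output=True, text=True, check=True
    ).stdout
    ranked = sorted(aggregate_rss(out).items(), key=lambda kv: kv[1], reverse=True)
    return [(name, kib / 1024) for name, kib in ranked[:limit]]

if __name__ == "__main__":
    for name, mib in top_memory_users():
        print(f"{mib:8.1f} MiB  {name}")
```

Run it with a few chat, music, and editor apps open and compare the totals against the browser’s own line.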

Modern Bloatware, Telemetry & AI — The New Normal

Almost all applications now collect telemetry data: clicks, session time, device type, Wi-Fi/mobile, location, etc. This tracking adds background processes and services that consume resources.

Both Windows 10 and Windows 11 collect user data for “security” and “personalization”. Windows 11 goes further with AI-driven features like Copilot, leading to more background tasks and network calls.

Combine this with:

- Default apps

- Background sync services

- Push notification daemons

- Update checkers

…and you have modern bloatware.

On top of that, many developers now lean heavily on AI-assisted coding, sometimes accepting generated code with little scrutiny, a practice often called “vibe coding”. That can introduce inefficient code that wastes CPU and memory, along with security vulnerabilities.
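To make “inefficient code” concrete, here is a hypothetical example of the kind of thing that slips through when code goes unreviewed; it is not taken from any particular AI tool. Both functions remove duplicates while keeping the original order, but the first checks membership by scanning a list, which makes it quadratic:

```python
import timeit

def dedupe_quadratic(items: list[str]) -> list[str]:
    # `x not in seen` scans the whole list each time: O(n^2) comparisons overall.
    seen: list[str] = []
    for x in items:
        if x not in seen:
            seen.append(x)
    return seen

def dedupe_linear(items: list[str]) -> list[str]:
    # Set membership is an average O(1) hash lookup, so this is O(n) overall.
    seen: set[str] = set()
    out: list[str] = []
    for x in items:
        if x not in seen:
            seen.add(x)
            out.append(x)
    return out

if __name__ == "__main__":
    data = [f"user{i % 2000}" for i in range(10_000)]
    assert dedupe_quadratic(data) == dedupe_linear(data)
    for fn in (dedupe_quadratic, dedupe_linear):
        print(f"{fn.__name__}: {timeit.timeit(lambda: fn(data), number=3):.3f} s")
```

Both return the same result, and the slow one looks perfectly reasonable at a glance, which is exactly why it survives without review. In idiomatic Python, `list(dict.fromkeys(items))` does the same job in one line.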

Conclusion: Convenience Over Efficiency

Software development today is easier and more convenient than ever — but the hardware cost has been pushed onto the user. Abstraction, portability, telemetry, and cross-platform support have replaced the old obsession with performance and efficiency.

The result? Machines feel outdated faster, not because hardware is weak, but because software demands have skyrocketed.

Your device might not be obsolete — it’s just being asked to do much more than before.